Authentication is hard. How do you deal with it sanely in a cloud environment? There are two approaches to follow, each with its upsides and downsides, but which one is best for your environment?

Foreword

If you’ve got more than a handful of systems, you inevitably need to find a way to unify authentication across them. However, this is no easy feat if you also have the relatively common requirement of keeping downtime to a minimum. So not only do you need to keep bad people out, you also need to let good people in, consistently. It must always be possible to make this decision.

Therefore, you have two main approaches to this issue: centralized and distributed decision-making. This is a logical choice, not a technological one, and it is the first choice you must make when going down this path. Each comes with its own pros and cons, and one of them definitely fits your environment better. There is, however, no one-size-fits-all answer. It ultimately depends on your personal and organizational needs.

From now on, I will present a high-quality example from each of those two domains. These are battle-tested solutions, used by big companies like Red Hat, Facebook, Netflix and so on. With this initial information, I hope you will at least get a first idea of what you want and be able to kickstart your own research.

Also, in this article I will use the term “authentication” to mean both authentication and authorization, as there’s no need here to treat those two problems differently.

Centralized authentication

Don’t be fooled by the current cloud hype that preaches absolute decentralization and promise-like behaviour everywhere. If you have legacy systems, or your objective isn’t simply serving the biggest crowd of users you can possibly imagine, distributed authentication is a problem you don’t need to have.

In fact, Microsoft proved this with its Active Directory solution. It works really well and meets all the goals of a typical small or mid-sized organization. Yes, integration isn’t its biggest strength, but Red Hat has solved this problem in the Linux domain with its own product.

I will now present this lesser-known product from Red Hat: Red Hat Identity Management, built on the FreeIPA open-source project. It works so well that you may be forgiven for thinking it is a single, monolithic solution like Microsoft AD. It is, on the contrary, composed of smaller open-source projects and standardized protocols that are already part of every POSIX system. Hence its ease of integration: there are already plenty of libraries and tools ready for it, and your legacy systems can easily be instructed to use it.

Also, unlike earlier tools such as a hand-maintained OpenLDAP server or a Kerberos KDC, it is freaking easy to deploy. There’s absolutely no need to, ugh, write LDIF files by hand or anything. It’s just as easy as setting up an AD domain server, and even easier to connect clients to it.

The FreeIPA stack includes the following software:

- 389 LDAP server

- MIT KDC server

- ISC BIND DNS server

- NTF NTPd server

- Dogtag CA & RA server

- Apache httpd server

And all of it is centrally managed through the FreeIPA web interface, the FreeIPA CLI or the FreeIPA web service API. There’s absolutely no need to directly touch any of those servers’ config files.

Server-side

Provisioning your first FreeIPA server is a little different from most POSIX tools you have used, as you don’t really need to touch any config file. Basically, you install the ipa-server package with your package manager and then simply call the ipa-server-install script, passing as arguments all the infrastructure details you find relevant at install time. Aside from the main domain, you can easily change all of those later with the server already up and running.

You can find a quickstart guide in the FreeIPA documentation.

From then on, almost all aspects of the FreeIPA deployment and identity content can be administered through its web interface. Even a junior sysadmin who has never touched FreeIPA before can do it. A few advanced features can only be managed through the FreeIPA CLI, but even those are meant to be implemented in the web interface as soon as there’s developer capacity to do it.

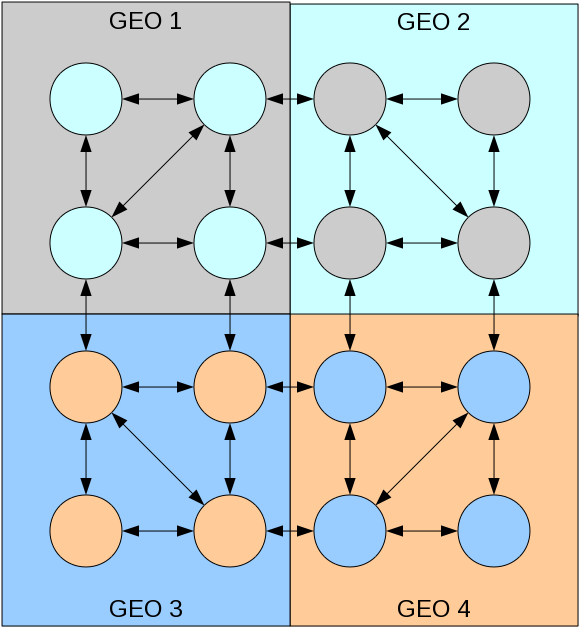

Even though the FreeIPA server is centralized by nature, it can be replicated across multiple hot-hot FreeIPA servers, giving you fault tolerance in an intrinsically centralized infrastructure. The deployment recommendations in the FreeIPA documentation include a nice diagram of the supported deployment architectures within and across datacenters.

Client-side

Just as on the server side, you don’t need to manually configure any of the subsystems that make up a full-featured FreeIPA client. All you have to do is install the ipa-client package and run the ipa-client-install script with minimal arguments, since the script discovers most of the configuration automatically through the DNS SRV entries that the FreeIPA server adds to your DNS. Then, you just reboot. Really.
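To give an idea of what that discovery step involves, here is a minimal Python sketch of how a client could pick a server out of a set of SRV answers. It does no real DNS lookup. The `_ldap._tcp.<domain>` record name, the sample answers, and every function name are assumptions made for illustration, not code taken from the installer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SrvRecord:
    priority: int
    weight: int
    port: int
    target: str


def srv_name(domain: str, service: str = "ldap", proto: str = "tcp") -> str:
    """Build the SRV owner name a client would look up (assumed naming)."""
    return f"_{service}._{proto}.{domain.rstrip('.')}"


def parse_srv(rdata: str) -> SrvRecord:
    """Parse the 'priority weight port target' part of an SRV answer."""
    priority, weight, port, target = rdata.split()
    return SrvRecord(int(priority), int(weight), int(port), target.rstrip("."))


def order_candidates(records):
    """Lowest priority first, then highest weight.

    This is a simplification: real resolvers pick randomly among records
    of equal priority, in proportion to their weight.
    """
    return sorted(records, key=lambda r: (r.priority, -r.weight))


# Hypothetical answers for _ldap._tcp.example.com
answers = [
    "0 100 389 ipa1.example.com.",
    "10 100 389 ipa2.example.com.",
    "0 50 389 ipa3.example.com.",
]
candidates = order_candidates(parse_srv(a) for a in answers)
print(srv_name("example.com"), [c.target for c in candidates])
```

The point is that the client only needs to know its domain name: everything else is looked up, which is why the install script needs so few arguments.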

The machine’s SSH host key has now been added to its host entry in the FreeIPA server, and the FreeIPA client shim has been installed in both the SSH daemon and the SSH client on this system, so it automatically trusts the keys of all other hosts in your infrastructure, since FreeIPA keeps a record of them. Also, all users - as long as they’re authorized - can already log into this system through SSH with the SSH keys associated with their user entry, just as they can use a password, or a combination of those with two-factor authentication. Everything works out of the box.

The system’s DNS A and PTR entries have already been added to the FreeIPA DNS server, so you can reach it just by knowing its name. The entries are automatically updated when the system boots up, so this also works with dynamically leased addresses.

If your system provides a service that uses an SSL certificate, you can register it in FreeIPA so that the certificate is issued and kept up to date through automatic renewals, enabling you to use very short-lived certificates to enhance security. Given that the Dogtag server used by FreeIPA is also used by many commercial Certificate Authorities (CAs) around the world, you can rest assured that the implementation of this X.509 scheme is sane and trusted.

Finally, you can provide Single Sign-On for both users and servers with the Kerberos service provided by FreeIPA. Once you’ve called kinit and typed your password (which may be combined with a 2FA token), you can seamlessly log into web services, SSH servers and every other type of service that supports Kerberos. This includes Windows. Oh yes, FreeIPA integrates with Windows clients and can even establish a trust with a Microsoft Active Directory domain.

This is all nicely tied up in the provisioning phase by the ipa-client-install script, and in the runtime phase by the SSSD client, so you don’t need to configure any of those manually.

Disaster situation

The big question in authentication for sysadmins, however, isn’t about all the features that a given solution brings to the table, but what happens when shit hits the fan, everything stops working and you need to act fast. SSSD handles that for you, somewhat.

SSSD, just like Windows, caches credentials so that it can authorize access to the system even when it is unable to contact the server. The credential cache also speeds things up and decreases the load on the server. If you have accessed the system some time ago, chances are you will be able to do it again in the event of a disaster (connection down, FreeIPA server crashed or otherwise unavailable).

The keyword in that sentence is, however, chances. The SSSD client can’t authorize you if you have already dropped out of its cache, or if it has never seen you. You don’t carry any authorization information with you other than your password or certificate. The server always has to reach the FreeIPA instance to ask what you are or aren’t allowed to do.

Also, this cache is wiped out on every reboot, so in the event of a datacenter-wide power failure, you won’t be able to log into any of your systems before the FreeIPA servers are up and the network is working properly.
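The behaviour described in this section can be modelled in a few lines of Python. This is a toy simulation of the idea, not SSSD’s actual implementation: the class names, the in-memory cache and the `reboot` method are all assumptions, chosen only to mirror the description above.

```python
import hashlib
import hmac
import os


class DirectoryUnavailable(Exception):
    """Raised when the central server cannot be reached."""


class FakeDirectory:
    """Stand-in for the central server (hypothetical, for the demo only)."""

    def __init__(self, users):
        self.users = users
        self.up = True

    def check(self, user, password):
        if not self.up:
            raise DirectoryUnavailable()
        return self.users.get(user) == password


class CachingAuthenticator:
    def __init__(self, online_check):
        self._online_check = online_check
        self._cache = {}  # user -> (salt, salted password digest)

    @staticmethod
    def _digest(salt, password):
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 10_000)

    def login(self, user, password):
        try:
            ok = self._online_check(user, password)
        except DirectoryUnavailable:
            return self._login_from_cache(user, password)
        if ok:
            # Remember the successful login for later offline use.
            salt = os.urandom(16)
            self._cache[user] = (salt, self._digest(salt, password))
        else:
            self._cache.pop(user, None)
        return ok

    def _login_from_cache(self, user, password):
        entry = self._cache.get(user)
        if entry is None:
            return False  # never seen, or already dropped from the cache
        salt, digest = entry
        return hmac.compare_digest(digest, self._digest(salt, password))

    def reboot(self):
        # Models the cache loss on reboot as described in this article.
        self._cache.clear()


directory = FakeDirectory({"alice": "s3cret", "bob": "hunter2"})
host = CachingAuthenticator(directory.check)
host.login("alice", "s3cret")          # online login, fills the cache
directory.up = False                   # disaster strikes
print(host.login("alice", "s3cret"))   # served from the cache
print(host.login("bob", "hunter2"))    # bob never logged in here
```

Alice gets in during the outage only because she had logged in before. Bob has valid credentials and is still locked out, which is exactly the "chances" problem.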

In most companies, this is an easy trade-off to accept in exchange for such ease of administration. In a company like Facebook, it is unthinkable.

Distributed authentication

Basically, Facebook came to the conclusion that using a centralized solution at their global scale, with almost a million active servers, is completely insane and far too downtime-prone. After quite a bit of investigation, they were able to build a solution that met all their requirements with something that was already available off the shelf, but had been a little obscure so far.

I’m talking about the OpenSSH certificate system, which Marlon Dutra so brilliantly explained on Facebook’s Code blog.

This solution provides tight security controls, high availability and reliability, and even high performance with a simple trick: you don’t need to contact a login server at all. All the information needed to authorize an access is already contained in the user certificate, which users present themselves when they want to log into the system, and the system being accessed simply checks that the signature matches the CA it trusts.

There’s no SPOF here. You can even log into a machine that is completely isolated from the network for whatever reason, since the CA certificate is contained in its image (or programmatically rotated every once in a while).
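To show why no login server is needed, here is a small Python sketch of the decision a host makes. It is not OpenSSH’s certificate format or code. The signature uses toy textbook RSA built from two well-known Mersenne primes, which is insecure and only there so the example runs with the standard library (it needs Python 3.8 or newer). All names and fields are assumptions, and the step where the user proves possession of the private key is left out.

```python
import hashlib
import json
from dataclasses import dataclass

# Toy CA key pair. INSECURE, for demonstration only.
_P, _Q = 2**127 - 1, 2**89 - 1
CA_PUBLIC = (_P * _Q, 65537)  # (n, e): the only thing hosts need
_CA_PRIVATE = pow(65537, -1, (_P - 1) * (_Q - 1))  # stays on the CA machine


def _digest(payload: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(payload).digest(), "big") % n


@dataclass(frozen=True)
class Certificate:
    key_id: str
    principals: tuple
    valid_after: int   # Unix timestamps
    valid_before: int
    signature: int = 0

    def payload(self) -> bytes:
        body = {
            "key_id": self.key_id,
            "principals": list(self.principals),
            "valid_after": self.valid_after,
            "valid_before": self.valid_before,
        }
        return json.dumps(body, sort_keys=True).encode()


def ca_sign(key_id, principals, valid_after, valid_before) -> Certificate:
    """Runs on the (possibly offline) CA machine."""
    unsigned = Certificate(key_id, tuple(principals), valid_after, valid_before)
    n, _ = CA_PUBLIC
    signature = pow(_digest(unsigned.payload(), n), _CA_PRIVATE, n)
    return Certificate(key_id, tuple(principals), valid_after, valid_before, signature)


def host_accepts(cert, wanted_principal, now, ca_public=CA_PUBLIC) -> bool:
    """Runs on the target host, using only local data."""
    n, e = ca_public
    if pow(cert.signature, e, n) != _digest(cert.payload(), n):
        return False  # not signed by the trusted CA, or tampered with
    if not (cert.valid_after <= now < cert.valid_before):
        return False  # expired or not yet valid
    return wanted_principal in cert.principals


cert = ca_sign("alice", ["deploy", "root"], valid_after=1000, valid_before=2000)
print(host_accepts(cert, "root", now=1500))
```

Note that `host_accepts` never opens a network connection: the CA public key, the clock and the certificate presented by the user are all it needs.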

Maintaining such an infrastructure, though, is a little cumbersome: properly organizing and securing a CA infrastructure is tough and costly, with none of the helping hands that a solution like FreeIPA brings. But it can be done, provided you’ve reached the point where this becomes beneficial in terms of time and cost.

Server-side

Well, there’s no server side. There’s just a secure machine that you use to generate certificates, and that may very well be offline for enhanced security. You need, however, a simple webserver to provide the Certificate Revocation List (CRL) for the certificates that were compromised or otherwise put out of use.

Even this webserver, though, doesn’t need to be up all the time. As long as a client system has a recent enough version of the list, you are completely OK. Since this list is also signed, you may very well distribute it from various points of the network or even have clients exchange it in a peer-to-peer fashion.

What you might want is a central way to audit those accesses, like a Graylog or Hive instance, enabling you to search across chronological data and find possible abuses or intrusions. OpenSSH conveniently logs all the information about the certificate used to log into the system to the journal, which makes data extraction quite simple. Even so, log-system downtime isn’t going to impact your ability to access your systems: the logs are delivered on a best-effort basis.
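As a rough idea of what that extraction could look like, here is a Python sketch that pulls the certificate details out of a login line. The sample line is an assumption modelled on what recent OpenSSH versions print for certificate logins. The exact format varies between versions, so check your own logs before relying on this pattern. The function name and the returned field names are made up for this example.

```python
import re
from typing import Optional

# Assumed log format; the key fingerprint before "ID" is treated as optional.
CERT_LOGIN = re.compile(
    r"Accepted publickey for (?P<user>\S+) from (?P<ip>\S+) port (?P<port>\d+) ssh2: "
    r"(?P<key_type>\S+-CERT) (?:(?P<fingerprint>\S+) )?ID (?P<key_id>.+?) "
    r"\(serial (?P<serial>\d+)\) CA (?P<ca_type>\S+) (?P<ca_fingerprint>\S+)"
)


def parse_cert_login(line: str) -> Optional[dict]:
    """Return the certificate fields of a login line, or None if it has none."""
    match = CERT_LOGIN.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["port"] = int(fields["port"])
    fields["serial"] = int(fields["serial"])
    return fields


# Hypothetical sample line
sample = (
    "Accepted publickey for deploy from 10.0.0.5 port 51234 ssh2: "
    "ED25519-CERT SHA256:AbCdEf ID alice@laptop (serial 42) CA ED25519 SHA256:XyZ123"
)
event = parse_cert_login(sample)
print(event["key_id"], "logged in as", event["user"], "with serial", event["serial"])
```

The interesting part for auditing is that the key ID and serial identify the person and the certificate, while the user field only tells you which account was used.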

Client-side

In the distributed solution, this is where the magic resides. By instructing your OpenSSH server to authorize users based on the information in their certificate after checking its signature, you can have the user carry all the info you might want.

You will need to configure the client to ship all of its logs to your logging solution, but this problem has long been solved with rsyslog or more modern tools like journalbeat.

The downside is, however, that the authorization logic is partly kept in the client itself: you need a way to change this information when you find it necessary, be it through a configuration manager like Puppet or Ansible (which you should already be using), or - probably like Facebook does - by cycling the whole VM image.

Disaster situation

As long as the CA and CRL are kept within their expiration dates, you will be able to log into the system without help from any external service. Even completely offline.

Also, there’s no performance bottleneck even with billions of simultaneous logins; after all, you have no central place to log into. If you have ever used an undersized Microsoft AD deployment on a college campus or in a big company, you know what I’m talking about.

Revoking a key is as simple as adding the key’s fingerprint to the CRL, then signing and publishing it. The revocation will be distributed as fast as you have configured your clients to fetch it. Although not as instantaneous as in the centralized solution, this delay is quite acceptable given the benefits brought by this choice.
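The host-side logic for such a list can be sketched in Python as below. This is an illustration under assumptions, not OpenSSH’s revocation file format: keys are identified by a plain fingerprint string, the signature check on the list is left out, and refusing logins when the list is too old is a policy I picked for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RevocationList:
    issued_at: int        # Unix timestamp set by the CA when publishing
    revoked: frozenset    # fingerprints of revoked keys


def revoke(current: RevocationList, fingerprint: str, now: int) -> RevocationList:
    """CA side: publish a new list that also contains `fingerprint`."""
    return RevocationList(issued_at=now, revoked=current.revoked | {fingerprint})


class HostRevocationState:
    """Host side: keeps the newest list seen and judges keys against it."""

    def __init__(self, max_age_seconds: int):
        self.max_age_seconds = max_age_seconds
        self.current = None

    def update(self, fetched: RevocationList) -> bool:
        # Only move forward in time, so a stale mirror or peer
        # cannot roll the host back to an older list.
        if self.current is None or fetched.issued_at > self.current.issued_at:
            self.current = fetched
            return True
        return False

    def allows(self, fingerprint: str, now: int) -> bool:
        if self.current is None:
            return False
        if now - self.current.issued_at > self.max_age_seconds:
            return False  # list too old: fail closed (assumed policy)
        return fingerprint not in self.current.revoked


first = RevocationList(issued_at=0, revoked=frozenset())
host = HostRevocationState(max_age_seconds=3600)
host.update(first)
host.update(revoke(first, "SHA256:stolen-laptop", now=200))
print(host.allows("SHA256:stolen-laptop", now=300))
```

Because the host only ever accepts a newer list, it does not matter where the list comes from, which is what makes mirrors and peer-to-peer distribution workable.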

The third way

Netflix liked a little bit of both, and developed another solution called BLESS, which runs a CA on demand, signing very short-lived SSH certificates (like 5 minutes or so) based on business rules.
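The general idea of an on-demand CA with short lifetimes can be sketched as follows in Python. This is not BLESS’s API or code. The rule table, the names and the 5-minute lifetime are assumptions taken from the description above, and the actual signing step is left out.

```python
CERT_LIFETIME_SECONDS = 5 * 60

# Hypothetical business rules: which user may ask for which principal.
ALLOWED_PRINCIPALS = {
    "alice": {"deploy", "root"},
    "bob": {"deploy"},
}


def issue_certificate(user, principal, now,
                      rules=ALLOWED_PRINCIPALS,
                      lifetime=CERT_LIFETIME_SECONDS):
    """Decide at request time whether to issue, and for how long."""
    if principal not in rules.get(user, set()):
        raise PermissionError(f"{user} may not log in as {principal}")
    return {
        "key_id": user,
        "principals": [principal],
        "valid_after": now,
        "valid_before": now + lifetime,
    }


def still_valid(cert, now):
    return cert["valid_after"] <= now < cert["valid_before"]


cert = issue_certificate("bob", "deploy", now=1_000)
print(still_valid(cert, now=1_100), still_valid(cert, now=1_400))
```

With lifetimes this short, a leaked certificate is useless within minutes, so revocation matters much less. The price is that the signing service has to be reachable every time someone wants to log in.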

While this certainly solves the performance issue, it certainly can’t be used as the only authentication mechanism in their infrastructure, as the BLESS system itself runs on the AWS Lambda service, and we know that is too complex to be easily relied on - just as the recent outage taught us.

Afterword

If there’s one piece of advice you should take from this article, it is that you should plan well for your disaster scenarios. There are very few worse feelings than knowing you’ve locked yourself out of your systems in the middle of an outage, other than maybe, like… almost completely losing all of your customer data.

However, you shouldn’t be paranoid to the point of decentralizing everything for the sake of it, only to find yourself in an impossible-to-untangle mess of distributed logic with bad visibility.